Army develops a novel computational model that allows robots to ask clarifying questions to Soldiers

ADELPHI, Md. — Army researchers developed a novel computational model that allows robots to ask clarifying questions to Soldiers, enabling them to be more effective teammates in tactical environments.

Future Army missions will include autonomous agents, such as robots, embedded in human teams and making decisions in the physical world.

One major challenge toward this goal is maintaining performance when a robot encounters something it has not previously seen — for example, a new object or location.

Robots will need to be able to learn these novel concepts on the fly in order to support the team and the mission.

“Our research explores a novel method for this kind of robot learning through interactive dialogue with human teammates,” said Dr. Felix Gervits, researcher at the U.S. Army Combat Capabilities Development Command, known as DEVCOM, Army Research Laboratory. “We created a computational model for automated question generation and learning. The model enables a robot to ask effective clarification questions based on its knowledge of the environment and to learn from the responses. This process of learning through dialogue works for learning new words, concepts and even actions.”

Researchers integrated this model into a cognitive robotic architecture and demonstrated that this approach to learning through dialogue is promising for Army applications.

This research represents the culmination of a multi-year DEVCOM ARL project funded under the Office of the Secretary of Defense Laboratory University Collaboration Initiative, or LUCI, program for joint work with Tufts University and the Naval Research Laboratory.

In previous research, Gervits and team conducted an empirical study to explore and model how humans ask questions when controlling a robot. This led to the creation of the Human-Robot Dialogue Learning, or HuRDL, corpus, which contains labeled dialogue data that categorizes the form of questions that study participants asked.

The HuRDL corpus serves as the empirical basis for the computational model for automated question generation, Gervits said.

The model uses a decision network, which is a probabilistic graphical model that enables a robot to represent world knowledge from its various sensory modalities, including vision and speech. It reasons over these representations to ask the best questions to maximize its knowledge about unknown concepts.

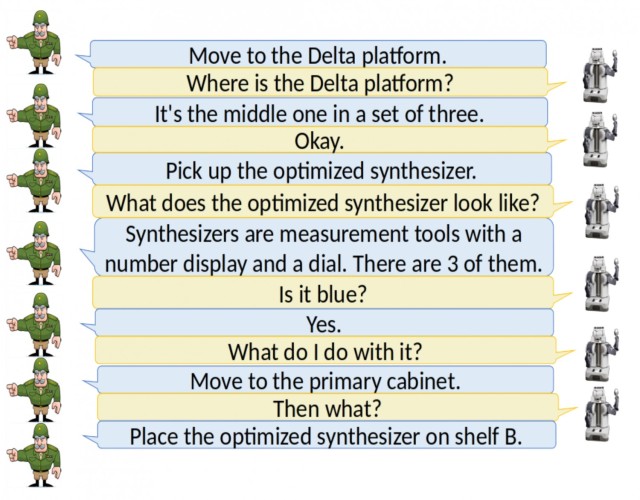

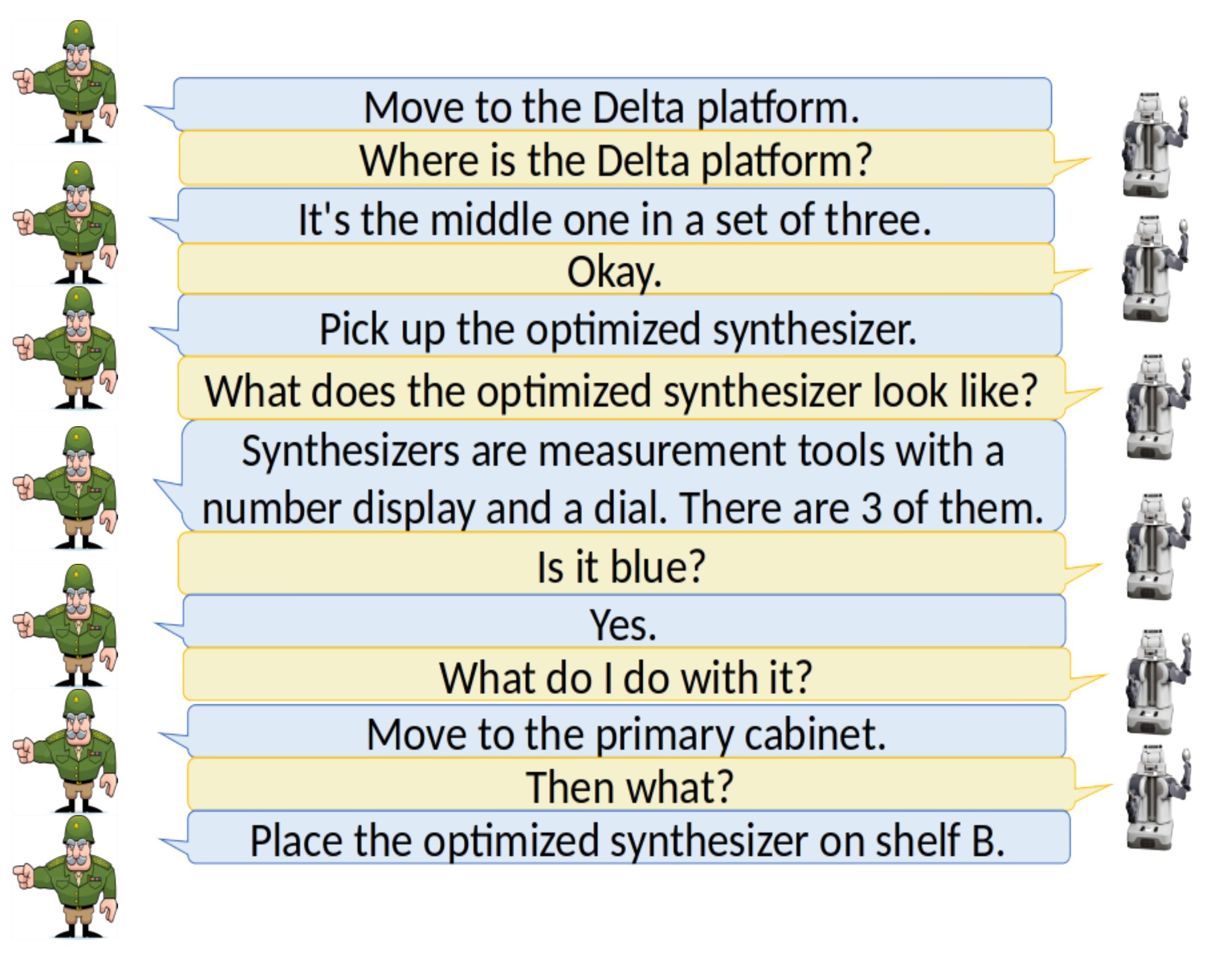

For example, he said, if a robot is asked to pick up an object it has never seen before, it might try to identify the object by asking a question such as “What color is it?” or another question drawn from the HuRDL corpus.
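The article does not describe the internals of the decision network. As a rough illustration of the underlying idea of asking the question that most reduces uncertainty and then learning from the answer, the sketch below scores attribute questions by expected information gain over a small set of candidate objects. It is a simplified stand-in, not the ARL model: the candidate objects, their attributes, the uniform prior and the assumption that the Soldier always answers truthfully are all hypothetical.

```python
import math

# Hypothetical candidate objects the robot might be referring to, described by
# attributes it can ask about. Names and values are illustrative only.
CANDIDATES = {
    "wrench": {"color": "red", "shape": "long"},
    "hammer": {"color": "red", "shape": "long"},
    "tape": {"color": "blue", "shape": "round"},
    "screwdriver": {"color": "blue", "shape": "long"},
}


def entropy(probs):
    """Shannon entropy, in bits, of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def expected_information_gain(belief, attribute):
    """Expected drop in uncertainty about the referent after asking about
    `attribute`, assuming the human answers truthfully."""
    # Group the belief mass by the answer each candidate would produce.
    by_answer = {}
    for obj, p in belief.items():
        by_answer.setdefault(CANDIDATES[obj][attribute], []).append(p)
    h_after = 0.0
    for probs in by_answer.values():
        p_answer = sum(probs)
        h_after += p_answer * entropy([p / p_answer for p in probs])
    return entropy(belief.values()) - h_after


def best_question(belief, attributes):
    """Pick the attribute whose answer is expected to be most informative."""
    return max(attributes, key=lambda a: expected_information_gain(belief, a))


def update(belief, attribute, answer):
    """Learn from the response: keep consistent candidates and renormalize."""
    kept = {o: p for o, p in belief.items() if CANDIDATES[o][attribute] == answer}
    total = sum(kept.values())
    if total == 0:
        raise ValueError(f"no known object has {attribute} = {answer!r}")
    return {o: p / total for o, p in kept.items()}


if __name__ == "__main__":
    belief = {obj: 1 / len(CANDIDATES) for obj in CANDIDATES}  # uniform prior
    question = best_question(belief, ["color", "shape"])
    print(f"What {question} is it?")  # prints "What color is it?"
    belief = update(belief, question, "blue")  # suppose the Soldier says "blue"
    print(best_question(belief, ["color", "shape"]))  # prints "shape"
```

With four equally likely candidates, asking about color splits them evenly (1 bit gained), while asking about shape leaves three "long" objects together (about 0.81 bits), so the sketch asks about color first. A real decision network would also weigh perceptual evidence from vision and speech, which this toy version omits.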

The question generation model was integrated into the Distributed Integrated Affect Reflection Cognition, or DIARC, robot architecture originating from collaborators at Tufts University.

In a proof-of-concept demonstration in a virtual Unity 3D environment, the researchers showed a robot learning through dialogue to perform a collaborative tool organization task.

Gervits said that while prior ARL research on Soldier-robot dialogue enabled robots to interpret Soldier intent and carry out commands, operating in tactical environments poses additional challenges.

For example, a command may be misunderstood because of loud background noise, or a Soldier may refer to a concept with which a robot is unfamiliar. As a result, Gervits said, robots need to learn and adapt on the fly if they are to keep up with Soldiers in these environments.

“With this research, we hope to improve the ability of robots to serve as partners in tactical teams with Soldiers through real-time generation of questions for dialogue-based learning,” Gervits said. “The ability to learn through dialogue is beneficial to many types of language-enabled agents, such as robots, sensors, etc., which can use this technology to better adapt to novel environments.”

Such technology can be employed on robots in remote collaborative interaction tasks such as reconnaissance and search-and-rescue, or in co-located human-agent teams performing tasks such as transport and maintenance.

This research differs from existing approaches to robot learning in its focus on interactive, human-like dialogue as a means of learning. This kind of interaction is intuitive for humans and avoids the need to develop complex interfaces for teaching the robot, Gervits said.

Another innovation of the approach is that it does not rely on extensive training data, as many deep learning approaches do.

Deep learning requires significantly more data to train a system, and such data is often difficult and expensive to collect, especially in Army task domains, Gervits said. Moreover, there will always be edge cases that the system hasn’t seen, and so a more general approach to learning is needed.

Finally, this research addresses the issue of explainability.

“This is a challenge for many commercial AI systems in that they cannot explain why they made a decision,” Gervits said. “On the other hand, our approach is inherently explainable in that questions are generated based on a robot’s representation of its own knowledge and lack of knowledge. The DIARC architecture supports this kind of introspection and can even generate explanations about its decision-making. Such explainability is critical for tactical environments, which are fraught with potential ethical concerns.”

Dr. Matthew Marge at ARL was the principal investigator of the LUCI project with Dr. Gordon Briggs at NRL serving as co-PI. Gervits led the empirical study design and algorithmic development for the decision network. Dr. Matthias Scheutz at the Tufts University Human-Robot Interaction Laboratory was the external university collaborator and provided the DIARC architecture. Other Tufts University personnel who supported the project include Dr. Antonio Roque, Genki Kadomatsu and Dean Thurston.

When combined with other related research at ARL such as the statistical language classifier and the Joint Understanding and Dialogue Interface, or JUDI, this research supports the broad goal of developing an advanced speech interface that can be utilized in various kinds of language-enabled autonomous systems for more effective Soldier-machine interaction.

“I am optimistic that this research will lead to a technology that will be used in a variety of Army applications,” Gervits said. “It has the potential to enhance robot learning in all kinds of environments and can be used to improve adaptation and coordination in Soldier-robot teams.”

The next step for this research is to improve the model by expanding the kinds of questions it can ask.

This is not trivial, Gervits said, because some questions are so open-ended that the responses they elicit are difficult to interpret.

For example, a question from the robot such as “What does the object look like?” can elicit a variety of responses from a Soldier that are challenging for the robot to interpret.

One workaround is to develop dialogue strategies that prioritize questions whose answers are easy to interpret, for example, “What shape is it?”

Such questions are not always the most useful ones to ask, Gervits said, so a balance needs to be struck.
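One simple way to picture this balance, offered here as an assumption rather than the researchers' method, is to weight each question's informativeness by how likely the robot is to understand the answer. In the sketch below, the `CandidateQuestion` class, the information-gain values and the interpretability probabilities are all hypothetical illustrations, not figures from the study.

```python
from dataclasses import dataclass


@dataclass
class CandidateQuestion:
    text: str
    info_gain: float  # expected bits learned if the answer is understood (hypothetical)
    p_interpretable: float  # estimated chance the robot can parse the answer (hypothetical)

    def expected_utility(self) -> float:
        # Assumes an answer the robot cannot interpret teaches it nothing.
        return self.info_gain * self.p_interpretable


def choose(questions):
    """Return the question with the highest expected utility."""
    return max(questions, key=lambda q: q.expected_utility())


# Case 1: shape separates the candidates well, so the easy question wins.
case_1 = [
    CandidateQuestion("What does the object look like?", 2.0, 0.3),
    CandidateQuestion("What shape is it?", 0.8, 0.9),
]
# Case 2: most candidates share a shape, so the open-ended question wins.
case_2 = [
    CandidateQuestion("What does the object look like?", 2.0, 0.3),
    CandidateQuestion("What shape is it?", 0.2, 0.9),
]

if __name__ == "__main__":
    print(choose(case_1).text)  # prints "What shape is it?"
    print(choose(case_2).text)  # prints "What does the object look like?"
```

Multiplying the two terms treats an uninterpretable answer as worthless; other weightings are equally plausible, and the point is only that an easy-to-interpret question loses its advantage when its answer would tell the robot little.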

While the researchers’ proof-of-concept demonstration shows this technology operating as part of a robot architecture in a virtual environment, they hope to validate it in physical environments in the near future as part of the AI for Maneuver and Mobility Essential Research Program.

“The physical world has additional challenges that robots need to overcome, including noisy sensor readings and potential for mechanical failure, and so such environments will serve as a stronger benchmark of performance,” Gervits said.

Researchers will present their findings at the 23rd ACM International Conference on Multimodal Interaction in October 2021 and publish a paper in the conference proceedings.

As the Army’s foundational research laboratory, ARL is operationalizing science to achieve transformational overmatch. Through collaboration across the command’s core technical competencies, DEVCOM leads in the discovery, development and delivery of the technology-based capabilities required to make Soldiers more successful at winning the nation’s wars and come home safely. DEVCOM Army Research Laboratory is an element of the U.S. Army Combat Capabilities Development Command. DEVCOM is a major subordinate command of the Army Futures Command.
